Developing a Backend Application with ZenWave SDK

Converting your ZDL models into a Spring Boot/Spring Cloud backend application with ZenWave SDK.

The term 'model' has a dual meaning in DDD: it's a representation of a concept in the problem space and a representation of a concept in the solution space. - Eric Evans in Domain Driven Design

ZenWave Domain Model Language (ZDL) is a lightweight, developer-friendly DSL grounded in DDD and API-First principles. This DSL serves as a ubiquitous language, enabling teams to think, discuss, and collaborate effectively on software design, and then use a fully customizable set of plugins to generate all the boring stuff that is already present in the model.

ZenWave SDK allows you to convert your ZDL models into a complete backend application through a series of customizable plugins.

In this section we will guide you through the process of modeling your application with ZDL and using ZenWave SDK plugins to generate the building blocks you can assemble to create your backend application.

Project structure

By default, ZenWave SDK generates a complete Spring Boot backend application following industry best practices, with a minimal dependency set: Spring Data JPA and MongoDB, Spring MVC, Spring Cloud Stream, MapStruct and optional Lombok integration.

It follows a pragmatic hexagonal/clean architecture with:

- A central core package containing domain entities and aggregates, plus the inbound (primary ports) and outbound (secondary ports) interfaces.

- The internal implementation of the inbound interfaces, which uses the outbound interfaces to interact with external services.

    📦 {{basePackage}}
       📦 adapters
           └─ web
           |  └─ RestControllers (spring mvc)
           └─ events
              └─ *EventListeners (spring-cloud-streams)
       📦 core
           ├─ 📦 domain
           |     └─ (entities and aggregates)
           ├─ 📦 inbound
           |     ├─ dtos/
           |     └─ ServiceInterface (inbound service interface)
           ├─ 📦 outbound
           |     ├─ mongodb
           |     |  └─ *RepositoryInterface (spring-data interface)
           |     └─ jpa
           |        └─ *RepositoryInterface (spring-data interface)
           └─ 📦 implementation
                 ├─ mappers/
                 └─ ServiceImplementation (inbound service implementation)
       📦 infrastructure
           ├─ mongodb
           |  └─ CustomRepositoryImpl (spring-data custom implementation)
           └─ jpa
              └─ CustomRepositoryImpl (spring-data custom implementation)

This is just the default project structure. You can choose from CleanHexagonal, Layered, SimpleDomain, HexagonalArchitecture, CleanArchitecture or create your own. See Customizing Generated Code for more details.
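The dependency direction in the default layout can be sketched in plain Java. This is a minimal illustration with no Spring involved, not the code ZenWave SDK generates: all class and interface names are hypothetical, the comments only indicate the package each type would live in, and the use of Optional for lookups is an assumption.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// core.domain: a plain domain entity (hypothetical, reduced to two fields)
class Customer {
    String id;
    String email;

    Customer(String id, String email) {
        this.id = id;
        this.email = email;
    }
}

// core.inbound: primary port, called by adapters such as REST controllers
interface CustomerService {
    Customer createCustomer(Customer input);

    Optional<Customer> getCustomer(String id);
}

// core.outbound: secondary port, implemented in the infrastructure package
interface CustomerRepository {
    Customer save(Customer customer);

    Optional<Customer> findById(String id);
}

// core.implementation: implements the inbound port and depends only on outbound ports
class CustomerServiceImpl implements CustomerService {
    private final CustomerRepository repository;

    CustomerServiceImpl(CustomerRepository repository) {
        this.repository = repository;
    }

    public Customer createCustomer(Customer input) {
        return repository.save(input);
    }

    public Optional<Customer> getCustomer(String id) {
        return repository.findById(id);
    }
}

// infrastructure: in-memory stand-in for a Spring Data repository
class InMemoryCustomerRepository implements CustomerRepository {
    private final Map<String, Customer> store = new HashMap<>();

    public Customer save(Customer customer) {
        store.put(customer.id, customer);
        return customer;
    }

    public Optional<Customer> findById(String id) {
        return Optional.ofNullable(store.get(id));
    }
}
```

Because the service implementation only sees the CustomerRepository interface, the persistence technology can be swapped without touching the core.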

Start Modeling with ZDL

You can find a full example of a ZenWave SDK generated application in the Customer Master Data Service (JPA) example.

Depending on your particular use case you have different approaches to start modeling with ZDL:

- Entities and Aggregates: Traditionally you would start your application modeling your data.

- Services and Commands: You can also start modeling the external behaviour of your application, describing which endpoints it exposes and which commands it listens to.

- Events: If you come from a big-picture exploratory Event Storming session you can start describing the events and the commands that trigger them.

We will start with the first approach: describing the data model, then the behaviour, and finally the domain events emitted to the outside world. You can use either of the other two options to start modeling your application.

Entities and Aggregates

We start describing our data model with the entity keyword, adding fields with fieldName, fieldType, validations and javadoc comments.

These javadoc comments can either precede the field definition on a separate line, typical Java convention, or be appended to the end of the line where the field is defined, which is a departure from the typical Java convention but is very handy for small comments.

For field types, you can use any valid type in java.lang.*, java.math.*, java.time.* or java.util.*, or reference any other entity or enum. Only these field types have been tested.

Field types can also be arrays of the above types or references. Direct references and arrays only work with MongoDB. For JPA you will need to configure OneToOne, OneToMany, ManyToOne or ManyToMany relationships, and direct references will work only in places where JPA @Embeddable is allowed.

    @aggregate
    @auditing
    entity Customer {
        firstName String required minlength(2) maxlength(250) /** first name javadoc comment */
        lastName String required minlength(2) maxlength(250) /** last name javadoc comment */
        email String required unique /** email javadoc comment */
        phone String required
        /**
         * address is a direct reference, which only works in mongodb (not JPA)
         */
        addresses Address[]
    }

    @embedded
    entity Address {
        identifier String required /** Description identifier for this Address */
        street String required
        city String
        state String
        zip String
        type AddressType required /** address type is an enum */
    }

    enum AddressType { HOME(1) /** home description */, WORK(1) /** work description */ }

Special annotations

ZDL allows any annotation name on entities and fields. It is the job of ZenWave plugins to interpret those annotations and generate code accordingly.

The following annotations are special and will be interpreted by ZenWave SDK Backend Application Plugin in regard to entities and enums. They control aspects of generated code regarding persistence...

| Annotation | Entity | Id/Version | createdDate/By | lastModifiedDate/By | Persistence | Repository |
|---|---|---|---|---|---|---|
| <entity> | yes | yes | | | yes | |
| @aggregate | yes | yes | | | yes | yes |
| @embedded | yes | | | | yes | |
| @auditing | yes | | yes | yes | | |
| @skip | no | | | | | |
| @abstract | yes | no | | | | |

The most important ones are @aggregate, @embedded, @copy, @extends and @abstract.

@aggregate marks an entity as an aggregate root. Aggregate entities will have Spring Data Repository interfaces generated for them, which will be included as dependencies in your Service Implementations.

@embedded entities do not contain id and version fields and will not have a Spring Data Repository interface generated for them.

For @auditing entities it will add createdDate/By, lastModifiedDate/By fields to the entity. Usually you will use this annotation in your aggregate root entities.

With @vo it will generate a Java object without id, version and persistence annotations in the same package as persistent entities.

You can use @skip for entities that you only use as a source for @copy.

You can also reuse entity definitions or create a class hierarchy using @copy and @extends annotations, and mark some entities as @abstract.

    entity BaseEntity {
        someField String
    }

    @copy(BaseEntity)
    entity EntityA {
        // will contain all the fields from BaseEntity
    }

    @extends(BaseEntity)
    entity EntityB {
        // will extend BaseEntity
    }

While @extends will create a class hierarchy, @copy will copy all the fields from the referenced entity into the current entity. Use @copy when you need to reuse fields in entities that belong to different packages (like domain, inputs, outputs and events).

    @skip
    entity BaseEntity {
        someField String
    }

    @copy(BaseEntity)
    input InputA {
        // an input cannot extend an entity because the entity is not in an accessible package
    }
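The difference between copying fields and extending a base entity can be pictured in plain Java. The classes below are hypothetical shapes written by hand for illustration, not actual generator output: with @copy the field is duplicated and no type relationship exists, while with @extends the field is inherited.

```java
// Hypothetical Java shapes for the ZDL definitions above (illustration only).
class BaseEntity {
    String someField;
}

// @copy(BaseEntity): someField is duplicated, EntityA is unrelated to BaseEntity
class EntityA {
    String someField;
}

// @extends(BaseEntity): someField is inherited through the class hierarchy
class EntityB extends BaseEntity {
}

class CopyVsExtendsSketch {
    // True only for classes that are part of the BaseEntity hierarchy.
    static boolean isBaseEntity(Object candidate) {
        return candidate instanceof BaseEntity;
    }
}
```

Both EntityA and EntityB expose someField, but only EntityB can be passed where a BaseEntity is expected.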

Entity fields annotated with @transient will be annotated with the appropriate @Transient persistence annotation in the generated code.

Services Commands

Services bundle together the operations that your aggregates expose to the outside world. They are the entry point to the core, innermost layer, or hexagon of your application.

In a Domain-Driven Design (DDD) sense, it is the combination of entities annotated with @aggregate and their associated service commands that collectively forms an Aggregate: a cluster of domain objects that can be treated as a single transactional unit, ensuring consistency and enforcing business rules.

This combination is sometimes referred to as the Anemic Domain Model. We call it Separation of Concerns: it clearly separates where data is defined from the business processes you apply to it, and it works just fine for most microservice applications.

Services are still the only entry point to your aggregates' functionality, and this programming model greatly simplifies how to interact with external dependencies such as persistence, APIs and publishing domain events to an external broker.

Services Section in ZDL Reference provides more details on the syntax. This section will provide you guidance on how to use them.

    @aggregate
    entity Customer {
    }

    service CustomerService for (Customer) {
        /* the following service method names and signatures will generate CRUD operations */
        createCustomer(Customer) Customer withEvents CustomerEvent
        updateCustomer(id, Customer) Customer? withEvents CustomerEvent
        deleteCustomer(id) withEvents CustomerEvent
        getCustomer(id) Customer?
        @paginated
        listCustomers() Customer[]

        /* this will generate (almost) empty implementation placeholders for service and tests */
        updateCustomerAddress(id, Address) Customer? withEvents CustomerEvent

        // This will generate two methods: 'longRunningOperation' and 'longRunningOperationSync'
        @async("executorName")
        longRunningOperation(id) withEvents CustomerEvent
    }

Service commands resemble Java methods but should not be confused with them. They support only two types of parameters:

- id: the presence of this parameter specifies that this command operates on an aggregate entity instance with the given id.

- CommandInput: it can point to an entity or input type, and it's used to pass data to the command.

CommandOutput can be any valid entity or output type, or be unspecified. If CommandOutput is an entity, it expresses that entity will be created or updated with the command input data. CommandOutput can be marked as optional with a ? to express that the command may not return any output.
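As a rough sketch of how these parameter and output rules might translate to Java, the class below maps three commands by hand. It assumes that an optional output (marked with ?) becomes java.util.Optional and that an unspecified output becomes void. The class name and the in-memory map are hypothetical and stand in for a real repository.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

class Customer {
    String id;
    String firstName;

    Customer(String id, String firstName) {
        this.id = id;
        this.firstName = firstName;
    }
}

class CustomerCommandsSketch {
    private final Map<String, Customer> store = new HashMap<>();

    // createCustomer(Customer) Customer: no id parameter, the output is always present
    Customer createCustomer(Customer input) {
        store.put(input.id, input);
        return input;
    }

    // updateCustomer(id, Customer) Customer?: id selects the instance, the output may be absent
    Optional<Customer> updateCustomer(String id, Customer input) {
        Customer existing = store.get(id);
        if (existing == null) {
            return Optional.empty();
        }
        existing.firstName = input.firstName;
        return Optional.of(existing);
    }

    // deleteCustomer(id): unspecified output, assumed here to map to void
    void deleteCustomer(String id) {
        store.remove(id);
    }
}
```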

withEvents is used to specify the domain events that will be published after the command is executed. It can be a single event, a space separated list of events or two or more alternative events.

    service CustomerService for (Customer) {
        someCustomerCommand(id, Customer) Customer? withEvents CustomerEvent [AlternativeCustomerEvent|AnotherCustomerEvent]
    }
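One way to read this declaration is that CustomerEvent is always published, followed by exactly one of the two bracketed alternatives. The sketch below encodes that reading in plain Java. It is an interpretation rather than generated code: the useAlternative flag is a hypothetical stand-in for whatever business rule picks the branch, and event names are collected in a list instead of being sent to a broker.

```java
import java.util.ArrayList;
import java.util.List;

class AlternativeEventsSketch {
    // Returns the names of the events the command would publish, in order.
    static List<String> someCustomerCommand(boolean useAlternative) {
        List<String> published = new ArrayList<>();
        // CustomerEvent is listed outside the brackets, so it is emitted on every call
        published.add("CustomerEvent");
        // exactly one of the bracketed alternatives is emitted
        published.add(useAlternative ? "AlternativeCustomerEvent" : "AnotherCustomerEvent");
        return published;
    }
}
```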

Java signature for CommandInput can be expanded using an @inline annotation in the input type definition:

    @inline
    input CustomerFilter {
        firstName String
        lastName String
        email String
    }

    service CustomerService for (Customer) {
        @paginated
        searchCustomers(CustomerFilter) Customer[] // this will produce the following Java method signature:
        // Page<Customer> searchCustomers(String firstName, String lastName, String email)
    }

Inputs and Outputs

You can directly use entity types as command parameters, or you can create specific input and output types for service commands parameters and return types.

If you set the ZenWave SDK Backend Plugin option inputDTOSuffix to a non-empty string, it will automatically create an input class for each of your entities, copying all the fields from the entity.

Also, if you use an entity as a command parameter and you don't set inputDTOSuffix, ZenWave SDK will still use a MapStruct mapper to copy the parameter fields into the persistent entity. So you are always in control of how you map your input data into your persistent entities.

    @Transactional
    public Customer createCustomer(Customer input) {
        log.debug("Request to save Customer: {}", input);
        // Customer parameter is an entity, but it will be copied into a persistent entity here
        var customer = customerServiceMapper.update(new Customer(), input);
        customer = customerRepository.save(customer);
        return customer;
    }
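To make the copy step concrete, here is a hand-written equivalent of what an update-style mapper does. It is a sketch for illustration only: the real mapper is produced by MapStruct, and the class name CustomerServiceMapperSketch and the two-field Customer are hypothetical.

```java
// Hypothetical, reduced entity used only for this illustration.
class Customer {
    String firstName;
    String email;
}

class CustomerServiceMapperSketch {
    // Copies each mapped field from the input into the target and returns the target.
    static Customer update(Customer target, Customer input) {
        target.firstName = input.firstName;
        target.email = input.email;
        return target;
    }
}
```

Because the persistent entity is a fresh instance, only the fields listed in the mapping are taken from the incoming parameter.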

If you need more flexibility you can create your own input and output types.

    input CustomerInput {
        firstName String required minlength(2) maxlength(250)
        lastName String required minlength(2) maxlength(250)
        email String required unique
        phone String required
    }

    output CustomerOutput {
        firstName String required minlength(2) maxlength(250)
        lastName String required minlength(2) maxlength(250)
        email String required unique
        phone String required
    }

If you need to create an enum in the input package, you can use the @input annotation.

    @input // this will create an enum in the input package
    enum CustomerStatusInput { ACTIVE, INACTIVE }

Exposing your Services to Outside World

Your services represent the core of your application and are not exposed by default to the outside world, through adapters or primary ports.

ZenWave's proposed architecture uses API-First to describe the interface of your adapters and primary ports.

You can still annotate your Service Commands with @rest and @asyncapi annotations for two different purposes:

- Document how each service command will be exposed to the outside world.

- Generate draft versions of AsyncAPI and OpenAPI from your ZDL models. Then you can generate code from those API-First specifications using ZenWave AsyncAPI and OpenAPI Plugins.

ZDL Language is very compact and concise for this, but remember that API-First definitions are the source of truth for outside communication.

With ZDLToAsyncAPIPlugin and ZDLToOpenAPIPlugin plugins you can create complete draft versions of AsyncAPI and OpenAPI specifications from your annotated Services and Events.

    /**
     * Customer Service annotated for REST and AsyncAPI serves two purposes:
     * 1. Document how each service command will be exposed to the outside world.
     * 2. Generate draft versions of AsyncAPI and OpenAPI from your ZDL models.
     */
    @rest("/customers")
    service CustomerService for (Customer) {
        @post
        createCustomer(Customer) Customer withEvents CustomerEvent
        @put("/{customerId}")
        updateCustomer(id, Customer) Customer? withEvents CustomerEvent
        @put("/{customerId}/address/{identifier}")
        updateCustomerAddress(id, AddressInput) Customer? withEvents CustomerEvent CustomerAddressUpdated
        @delete("/{customerId}")
        deleteCustomer(id) withEvents CustomerEvent
        @get("/{customerId}")
        getCustomer(id) Customer?
        @get({params: {search: "string"}}) @paginated
        listCustomers() Customer[]
    }

    @skip // skip generating this domain enum, it will be generated by the AsyncAPI code generator.
    enum EventType { CREATED(1), UPDATED(1), DELETED(1) }

    @asyncapi({channel: "CustomerEventsChannel", topic: "customer.events"})
    event CustomerEvent {
        customerId String
        eventType EventType
        customer Customer
    }

    @asyncapi({channel: "CustomerAddressEventsChannel", topic: "customer.address-events"})
    event CustomerAddressUpdated {
        customerId String
        addressDescription String
        originalAddress Address
        newAddress Address
    }

See Producing Domain Events, Consuming Async Commands and Events and Exposing a REST API for specific details on how to use ZenWave SDK Plugins to generate code for your adapters and primary ports.

Events

Events carry the information about relevant changes in your bounded context. They are meant to be published to the outside world and documented through an API-First specification like AsyncAPI.

ZenWave SDK Backend Plugin can generate the code for publishing events as part of your service commands, but it does not generate code for the event data structures. Event data models are generated by ZenWave AsyncAPI Plugins.

AsyncAPI definitions are the source of truth for outside communication. Still, ZDL events work as a very compact and concise IDL to generate your AsyncAPI definition.

For instance the following definition will generate an AsyncAPI definition with:

    @asyncapi({channel: "OrderUpdatesChannel", topic: "orders.order_updates"})
    event OrderStatusUpdated {
        id String
        dateTime Instant required
        status OrderStatus required
        previousStatus OrderStatus
    }

- A schema named OrderStatusUpdated with a payload containing the id, dateTime, status and previousStatus fields.

- A message named OrderStatusUpdatedMessage pointing to the OrderStatusUpdated schema.

- A Channel named OrderUpdatesChannel containing a reference to the OrderStatusUpdatedMessage message.

- An Operation named onOrderStatusUpdated with a send action to the OrderUpdatesChannel channel.

This is about as compact as a format can get! It saves you a lot of typing and gives you a very concise representation of your events.

NOTE: you still need to add this event to a service command to generate the AsyncAPI definition for it.

    service OrdersService for (CustomerOrder) {
        // only emitted events will be included in the asyncapi definition
        updateOrder(id, CustomerOrderInput) CustomerOrder withEvents OrderStatusUpdated
    }

Including Event Publishing Code

Because this depends on ZenWave AsyncAPI Plugins naming conventions, you need to explicitly set the ZenWave SDK Backend Plugin option includeEmitEventsImplementation to true to include event publishing code in your core services.

    // This will only be generated if includeEmitEventsImplementation is set to true
    private final EventsMapper eventsMapper = EventsMapper.INSTANCE;
    private final ICustomerEventsProducer eventsProducer;

    @Transactional
    public Customer createCustomer(Customer input) {
        log.debug("Request to save Customer: {}", input);
        var customer = customerServiceMapper.update(new Customer(), input);
        customer = customerRepository.save(customer);
        // This will only be generated if includeEmitEventsImplementation is set to true
        var customerEvent = eventsMapper.asCustomerEvent(customer);
        eventsProducer.onCustomerEvent(customerEvent);
        return customer;
    }
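Since the service talks to the broker only through a producer interface, the publishing step can be exercised without any messaging infrastructure. The sketch below shows the idea with a capturing test double. Every type in it is a hypothetical stand-in written for this example. In particular, CustomerEventsProducer is not the interface generated by the ZenWave AsyncAPI plugins, and persistence is left out.

```java
import java.util.ArrayList;
import java.util.List;

class Customer {
    String id;

    Customer(String id) {
        this.id = id;
    }
}

class CustomerEvent {
    String customerId;
}

// Hypothetical stand-in for a generated events producer interface.
interface CustomerEventsProducer {
    void onCustomerEvent(CustomerEvent event);
}

// Test double that records events instead of sending them to a broker.
class CapturingProducer implements CustomerEventsProducer {
    final List<CustomerEvent> sent = new ArrayList<>();

    public void onCustomerEvent(CustomerEvent event) {
        sent.add(event);
    }
}

class CustomerServiceSketch {
    private final CustomerEventsProducer eventsProducer;

    CustomerServiceSketch(CustomerEventsProducer eventsProducer) {
        this.eventsProducer = eventsProducer;
    }

    Customer createCustomer(Customer input) {
        // persistence is omitted; map the entity to an event and publish it
        CustomerEvent event = new CustomerEvent();
        event.customerId = input.id;
        eventsProducer.onCustomerEvent(event);
        return input;
    }
}
```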

Generating Code with CLI or IntelliJ Plugin

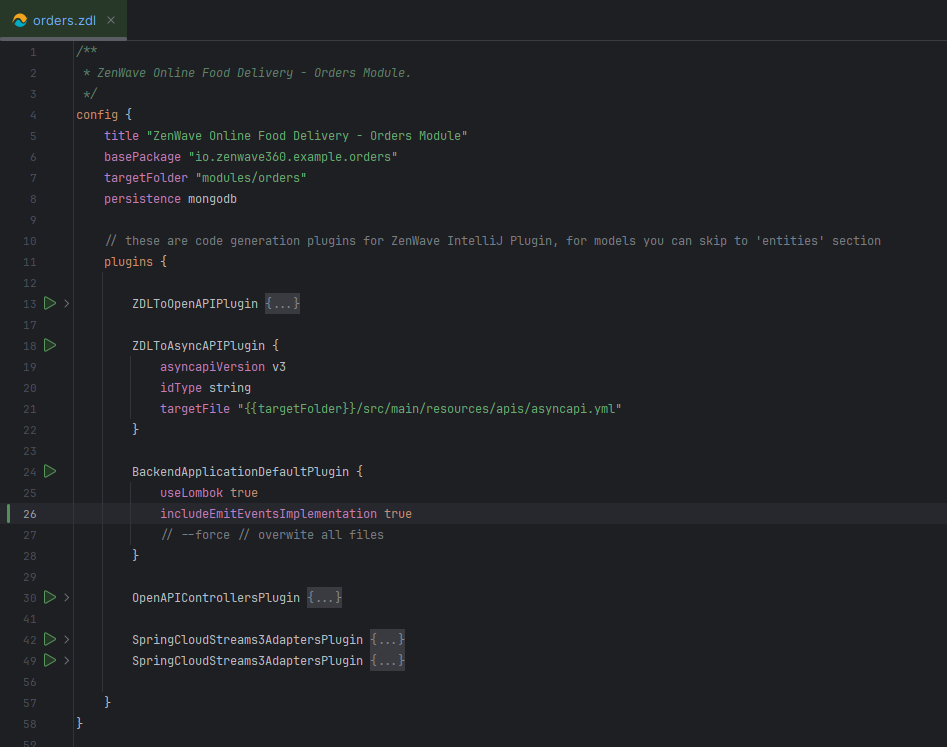

Now you can use the ZenWave SDK BackendApplicationDefaultPlugin to generate your backend application.

From the command line using JBang:

    jbang zw -p BackendApplicationDefaultPlugin \
        zdlFile=orders.zdl \
        basePackage="io.zenwave360.example.orders" \
        persistence=mongodb \
        useLombok=true \
        includeEmitEventsImplementation=true \
        targetFolder="modules/orders"

Or using ZenWave Model Editor in IntelliJ IDEA: